
Multimodal RAG - Images

For permission to repost, please contact the WeChat group owner.

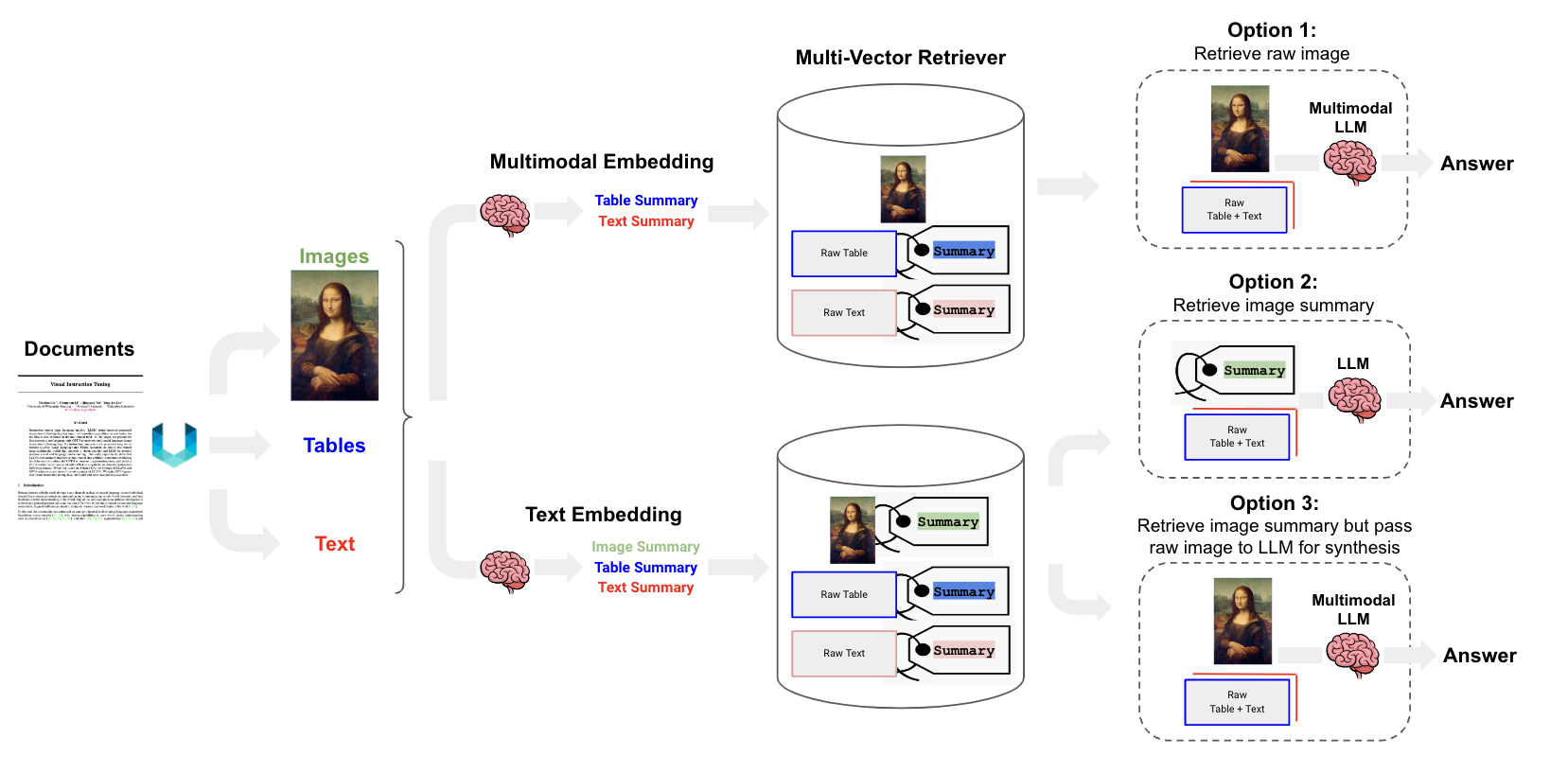

In the article Multimodal RAG (Text, Tables, Images), we introduced three approaches to RAG over the images in complex PDFs.

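Here we implement image RAG with approach 2: a multimodal LLM summarizes each image, and retrieval runs over those text summaries.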

First, install the required dependencies as described in the previous tutorial, Multimodal RAG in Practice - Tables and Text.

Partitioning the PDF into tables, text, and images

We use the LLaVA paper (LLaVA.pdf) for the demo.

```python
import os

from unstructured.partition.pdf import partition_pdf

# images_path is a global defined elsewhere in the original script: the directory
# that contains LLaVA.pdf and also receives the extracted images.


def extract_pdf():
    raw_pdf_elements = partition_pdf(
        filename=os.path.join(images_path, "LLaVA.pdf"),
        # Use layout model (YOLOX) to get bounding boxes (for tables) and find titles
        # Titles are any sub-section of the document
        infer_table_structure=True,
        # Post processing to aggregate text once we have the title
        chunking_strategy="by_title",
        # Chunking params to aggregate text blocks:
        # hard max of 4000 chars per chunk,
        # try to start a new chunk after 3800 chars,
        # combine small sections until they reach 2000 chars
        max_characters=4000,
        new_after_n_chars=3800,
        combine_text_under_n_chars=2000,
        strategy="hi_res",  # image extraction requires the "hi_res" strategy
        extract_images_in_pdf=True,  # must be True to extract images
        extract_image_block_types=["Image"],  # optional; ["Image", "Table"] also exports tables as images
        extract_image_block_to_payload=False,  # optional; False writes image files to disk
        extract_image_block_output_dir=images_path,
    )
    return raw_pdf_elements
```

Key parameters of `partition_pdf`:

`extract_images_in_pdf=True` extracts the images embedded in the PDF, and `extract_image_block_output_dir` sets the directory the extracted images are saved to.
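`partition_pdf` returns one flat list of elements. The full handling of text and tables is in the previous tutorial and not shown here; as a minimal sketch, the chunked output can be split by element class name. This assumes that with `chunking_strategy="by_title"` the list contains `CompositeElement` objects for text and `Table` (or `TableChunk` for oversized tables) objects for tables; `categorize_elements` is a hypothetical helper, not part of unstructured.

```python
def categorize_elements(raw_pdf_elements):
    """Split partition_pdf output into table strings and text strings.

    Hypothetical helper: it checks the class name, so it does not need to
    import unstructured's element classes. str(element) yields the element text.
    """
    tables, texts = [], []
    for element in raw_pdf_elements:
        kind = type(element).__name__
        if kind in ("Table", "TableChunk"):  # assumption: TableChunk = split table
            tables.append(str(element))
        elif kind == "CompositeElement":  # chunked text sections
            texts.append(str(element))
    return tables, texts
```

Usage would be `tables, texts = categorize_elements(extract_pdf())`; any other element types are simply ignored.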

Summarizing text and tables

See the previous tutorial, Multimodal RAG in Practice - Tables and Text.

Summarizing images

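```python
import os

from langchain_core.output_parsers import StrOutputParser
from langchain_core.prompts import ChatPromptTemplate
from langchain_openai import AzureChatOpenAI


def summarize_images():
    global image_summaries
    print("Summarizing images")
    # Create the LLM instance (key and endpoint come from the
    # AZURE_OPENAI_API_KEY and AZURE_OPENAI_ENDPOINT environment variables)
    llm = AzureChatOpenAI(
        model="gpt-4o-mini",
        azure_deployment="gpt-4o-mini",
        model_version="2024-07-18",
        api_version="2024-08-01-preview",
        temperature=0,
        max_tokens=None,
        timeout=None,
        max_retries=2,
    )
    # Prompt template: the image is passed as an image_url content part
    prompt = ChatPromptTemplate.from_messages([
        ("system", "Describe the image in detail. Be specific about graphs, such as bar plots."),
        ("human", [
            {"type": "text", "text": "Describe the image in detail."},
            {"type": "image_url", "image_url": {"url": "{image}"}},
        ]),
    ])
    # Build the chain: prompt -> LLM -> plain string
    chain = prompt | llm | StrOutputParser()
    image_summaries = []
    # Walk every file in images_path (sorted for a stable order)
    for image_file in sorted(os.listdir(images_path)):
        # Skip anything that is not an image, e.g. LLaVA.pdf itself
        if image_file.lower().endswith((".png", ".jpg", ".jpeg", ".gif")):
            image_path = os.path.join(images_path, image_file)
            try:
                # local_image_to_data_url (defined elsewhere, not shown) turns
                # the local file into a base64 data URL
                base64_image = local_image_to_data_url(image_path)
                summary = chain.invoke({"image": base64_image})
                image_summaries.append(summary)
                print(f"Processed image: {image_file}")
            except Exception as e:
                print(f"Error processing image {image_file}: {e}")
    print(f"Processed {len(image_summaries)} images in total")
    return image_summaries
```

Core logic: walk the directory the PDF images were extracted to, and have a multimodal LLM (here gpt-4o-mini) summarize each image.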
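The article calls `local_image_to_data_url` but never shows its definition. Judging from how it is used (its result goes straight into `image_url.url`), it most likely converts a local file into a base64 data URL. A minimal standard-library sketch under that assumption:

```python
import base64
import mimetypes


def local_image_to_data_url(image_path):
    """Encode a local image file as a base64 data URL (sketch, not the original)."""
    # Guess the MIME type from the file extension, e.g. ".jpg" -> "image/jpeg"
    mime_type, _ = mimetypes.guess_type(image_path)
    if mime_type is None:
        # Unknown extension: fall back to a generic binary type
        mime_type = "application/octet-stream"
    with open(image_path, "rb") as f:
        encoded = base64.b64encode(f.read()).decode("utf-8")
    return f"data:{mime_type};base64,{encoded}"
```

A data URL keeps the request self-contained: the image bytes travel inside the prompt, so nothing needs to be hosted at a public URL.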

Creating the retriever

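```python
import uuid

from langchain.retrievers.multi_vector import MultiVectorRetriever
from langchain.storage import InMemoryStore
from langchain_chroma import Chroma
from langchain_core.documents import Document
from langchain_openai import AzureOpenAIEmbeddings


def create_retriever():
    global retriever, text_summaries, table_summaries, text_elements, table_elements, image_summaries
    # Use Azure OpenAI embeddings
    embeddings = AzureOpenAIEmbeddings(
        model="text-embedding-3-small",  # embeddings deployment name on Azure
        openai_api_version="2023-05-15",
    )
    # Vector store for the embedded summaries
    vectorstore = Chroma(collection_name="summaries", embedding_function=embeddings)
    # In-memory docstore for the original content
    store = InMemoryStore()
    id_key = "doc_id"
    # Initialize the retriever
    retriever = MultiVectorRetriever(
        vectorstore=vectorstore,
        docstore=store,
        id_key=id_key,
    )
    # Add text & table summaries
    # (omitted; see the previous tutorial)

    # Add image summaries: each summary gets one uuid shared by both stores
    img_ids = [str(uuid.uuid4()) for _ in image_summaries]
    summary_img = [
        Document(page_content=s, metadata={id_key: img_ids[i]})
        for i, s in enumerate(image_summaries)
    ]
    retriever.vectorstore.add_documents(summary_img)
    retriever.docstore.mset(list(zip(img_ids, image_summaries)))
    print("Retriever created")
```

The `InMemoryStore` docstore stores the original image summaries.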

The `vectorstore` stores the embedded (vectorized) image summaries.

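The two are linked through the shared `doc_id`. At query time, the user's question is embedded the same way, the vectorstore returns the closest summaries, their `doc_id` values are used to fetch the original image summaries from the `InMemoryStore`, and those are passed to the LLM together with the question.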
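To make the two-store lookup concrete, here is a toy, dependency-free sketch of the same idea. It is not LangChain's `MultiVectorRetriever`: a bag-of-words cosine similarity stands in for the embedding model, plain Python containers stand in for Chroma and `InMemoryStore`, and the class name `ToyMultiVectorRetriever` is hypothetical.

```python
import math
import uuid
from collections import Counter


def embed(text):
    """Stand-in 'embedding': lowercase word counts."""
    return Counter(text.lower().split())


def cosine(a, b):
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ToyMultiVectorRetriever:
    def __init__(self):
        self.vectorstore = []  # (vector, doc_id) pairs, playing the role of Chroma
        self.docstore = {}     # doc_id -> original content, playing the role of InMemoryStore

    def add(self, summaries, originals):
        for summary, original in zip(summaries, originals):
            doc_id = str(uuid.uuid4())  # shared key linking the two stores
            self.vectorstore.append((embed(summary), doc_id))
            self.docstore[doc_id] = original

    def invoke(self, query, k=1):
        q = embed(query)
        ranked = sorted(self.vectorstore, key=lambda item: cosine(q, item[0]), reverse=True)
        # Search over the summaries, but return what the docstore holds for those ids
        return [self.docstore[doc_id] for _, doc_id in ranked[:k]]
```

For example, after `r.add(["fried chicken arranged like a world map", "bar plot of benchmark accuracy"], ["orig-1", "orig-2"])`, the query `r.invoke("creative chicken map")` returns `["orig-1"]`. In approach 2 the originals are the summaries themselves; only the docstore contents change in approach 3.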

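This article demonstrates approach 2. Approach 3 differs in what the `InMemoryStore` docstore holds: the raw images themselves rather than their summaries, so the answering model must be able to read images.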
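With approach 3 the retrieved items are a mix of text and raw images, so the final prompt has to be assembled as a multimodal message. A minimal sketch, assuming images are stored in the docstore as base64 data URLs and that the chat model accepts OpenAI-style content parts (`{"type": "text"}` and `{"type": "image_url"}`, the same shape used in the image-summary prompt); `build_multimodal_content` is a hypothetical helper.

```python
def build_multimodal_content(retrieved, question):
    """Turn retrieved docstore values into OpenAI-style message content parts."""
    texts, image_parts = [], []
    for item in retrieved:
        if isinstance(item, str) and item.startswith("data:image/"):
            # A raw image from the docstore: send it as an image part
            image_parts.append({"type": "image_url", "image_url": {"url": item}})
        else:
            # Text or table content goes into the text prompt
            texts.append(str(item))
    prompt = (
        "Answer the question based only on the following context, "
        "which can include text, tables, and images.\n\n"
        + "\n\n".join(texts)
        + f"\n\nQuestion: {question}"
    )
    # Text part first, followed by any images
    return [{"type": "text", "text": prompt}] + image_parts
```

The result could be wrapped as `HumanMessage(content=build_multimodal_content(docs, question))` from `langchain_core.messages` and passed to a vision-capable model such as gpt-4o-mini.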

RAG

```python
from fastapi import HTTPException
from langchain_core.output_parsers import StrOutputParser
from langchain_core.prompts import ChatPromptTemplate
from langchain_core.runnables import RunnablePassthrough
from langchain_openai import AzureChatOpenAI


def answer():
    try:
        llm = AzureChatOpenAI(
            model="gpt-4o-mini",
            azure_deployment="gpt-4o-mini",  # or your deployment
            model_version="2024-07-18",
            api_version="2024-08-01-preview",
            temperature=0,
            max_tokens=None,
            timeout=None,
            max_retries=2,
            # other params...
        )
        # Prompt template
        template = """Answer the question based only on the following context, which can include text and tables:
{context}
Question: {question}
"""
        prompt = ChatPromptTemplate.from_template(template)
        # RAG pipeline: the retriever fills {context}, the raw question fills {question}
        chain = (
            {"context": retriever, "question": RunnablePassthrough()}
            | prompt
            | llm
            | StrOutputParser()
        )
        result = chain.invoke("Explain images / figures with playful and creative examples.")
        print("LLM answer:", result)
        return result
    except Exception as e:
        # HTTPException comes from FastAPI; the original appears to run inside a FastAPI app
        raise HTTPException(status_code=500, detail=str(e))
```

The user's question is: "Explain images / figures with playful and creative examples."

For this question, the retriever returns the following image summary:

'The image features a close-up of a tray filled with various pieces of fried chicken. The chicken pieces are arranged in a way that resembles a map of the world, with some pieces placed in the shape of continents and others as countries. The arrangement of the chicken pieces creates a visually appealing and playful representation of the world, making it an interesting and creative presentation.\n\nmain: image encoded in 865.20 ms by CLIP ( 1.50 ms per image patch'

Passing the user's question together with the retrieved image summary to the LLM produces the final answer:

'The text provides an example of a playful and creative image. The image features a close-up of a tray filled with various pieces of fried chicken. The chicken pieces are arranged in a way that resembles a map of the world, with some pieces placed in the shape of continents and others as countries. The arrangement of the chicken pieces creates a visually appealing and playful representation of the world, making it an interesting and creative presentation.'
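One detail of the RAG chain: `{"context": retriever}` drops the retrieved list into the prompt as-is, so the model sees a Python list representation. A common refinement is to join the items into plain text first. A minimal sketch; `format_docs` is a hypothetical helper that accepts both plain strings (what this article stores in the docstore) and `Document`-like objects with a `page_content` attribute.

```python
def format_docs(docs):
    """Join retrieved items into one context string, separated by blank lines."""
    # Plain strings pass through unchanged; Document-like objects contribute page_content
    return "\n\n".join(getattr(d, "page_content", d) for d in docs)
```

In the chain it would be used as `{"context": retriever | format_docs, "question": RunnablePassthrough()}`; LangChain wraps a plain function piped after a runnable into a `RunnableLambda`.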

